Resilient, Decentralized, and Privacy-Preserving Machine Learning

Decentralized AI for robust, private, and personalized services

Context

AI systems are increasingly pervasive, and most are built on centralized paradigms that rely on large-scale data aggregation. These approaches raise privacy, robustness, and scalability concerns. The REDEEM project addresses these limitations by advancing decentralized learning approaches that give individuals and organizations access to privacy-preserving, resilient, and efficient AI.

Objectives

The REDEEM project targets foundational innovations in decentralized machine learning, organized into three primary objectives:

- Algorithmic Exploration in Adversary-Free Environments

  - Development of novel optimization paradigms and decentralized algorithms for large-scale and unsupervised tasks.

  - Addressing dynamic networks and heterogeneity in computational resources and data distributions.

- Resilience to Adversarial Attacks

  - Investigating privacy-preserving methods like adaptive differential privacy and encryption.

  - Developing Byzantine-resilient algorithms and game-theoretic approaches to manage selfish behaviors.

- Integration and Validation in Real-World Settings

  - Studying trade-offs between resilience, performance, and privacy under practical constraints.

  - Delivering open-source tools and demonstrators for scalable decentralized learning.
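To make the adversary-free setting of the first objective concrete, here is a minimal sketch of randomized pairwise gossip averaging, a textbook building block of decentralized learning. It is an illustration only, not the project's algorithm: the function name `gossip_average`, the ring topology, and the local values are all assumptions chosen for the example.

```python
import random


def gossip_average(values, edges, rounds, seed=0):
    """Randomized pairwise gossip.

    Each round picks one edge at random; its two endpoints replace their
    values with their average. No central server ever sees all the values.
    """
    rng = random.Random(seed)
    x = list(values)
    for _ in range(rounds):
        i, j = rng.choice(edges)
        avg = (x[i] + x[j]) / 2.0
        x[i] = x[j] = avg
    return x


# Hypothetical setup: 6 peers on a ring, each holding one local scalar.
local_values = [1.0, 4.0, 2.0, 8.0, 5.0, 4.0]
n = len(local_values)
ring_edges = [(k, (k + 1) % n) for k in range(n)]

estimates = gossip_average(local_values, ring_edges, rounds=500)
global_mean = sum(local_values) / n

print("global mean:", global_mean)
print("largest deviation from the mean:",
      max(abs(e - global_mean) for e in estimates))
```

Each exchange preserves the sum of the values, so on a connected network every peer's estimate drifts toward the global mean. Dynamic networks and heterogeneous data, which the objective lists as open challenges, are exactly the cases this simple sketch does not handle.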

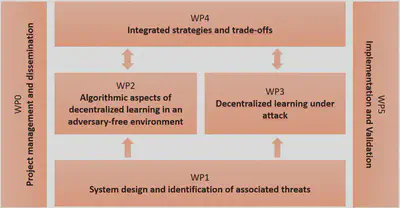

Work Package Structure

- WP0: Project management and dissemination.

- WP1: System design, threat identification, and evaluation frameworks.

- WP2: Algorithmic aspects in adversary-free decentralized learning.

- WP3: Mitigation of privacy and Byzantine attacks in decentralized learning.

- WP4: Integrated strategies and theoretical trade-offs.

- WP5: Implementation of efficient prototypes and large-scale validation.

Targeted Innovations

- Development of decentralized learning protocols robust to privacy breaches and malicious nodes.

- Efficient training algorithms for extremely large models distributed across peers.

- Personalized learning strategies to cater to diverse user needs.
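As an illustration of what robustness to malicious nodes can mean in practice, the sketch below compares plain averaging with the coordinate-wise median, a standard robust aggregation rule. It is not presented as the project's protocol; the helper names and the update vectors are hypothetical.

```python
from statistics import median


def mean_aggregate(updates):
    """Plain average of the peers' update vectors, coordinate by coordinate."""
    n = len(updates)
    return [sum(col) / n for col in zip(*updates)]


def median_aggregate(updates):
    """Coordinate-wise median: a minority of extreme values cannot drag
    the result arbitrarily far."""
    return [median(col) for col in zip(*updates)]


# Hypothetical model updates: four honest peers and one malicious peer.
honest = [[1.0, 2.0], [1.1, 1.9], [0.9, 2.1], [1.0, 2.0]]
byzantine = [[1000.0, -1000.0]]
updates = honest + byzantine

naive = mean_aggregate(updates)
robust = median_aggregate(updates)

print("mean:  ", naive)
print("median:", robust)
```

A single malicious peer pulls the mean far from the honest updates, while the median stays at the honest consensus as long as honest peers form a majority in each coordinate.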

Anticipated Impacts

- Creation of open-source tools and libraries for privacy-preserving machine learning.

- Contributions to European AI standards on decentralized and secure learning systems.

- Empowerment of SMEs, citizens, and regulators in the AI ecosystem.

Key References

- Scaman, K., et al. (2019). “Optimal Convergence Rates for Convex Distributed Optimization,” Journal of Machine Learning Research.

- Sabater, C., et al. (2023). “Private Sampling with Identifiable Cheaters,” PETS.

- European Commission (2020). White Paper on Artificial Intelligence.

- Dieuleveut, A., et al. (2022). “Differentially Private Federated Learning on Heterogeneous Data,” AISTATS.

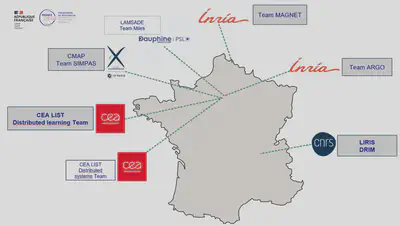

Consortium Partners

- CNRS (LIRIS DRIM Team): Expertise in resilient and privacy-preserving distributed systems.

- CEA (Distributed AI Team): Focus on adversarial robustness and privacy-enhanced learning.

- INRIA MAGNET Team: Contributions to decentralized optimization and privacy-preserving techniques.

- Ecole Polytechnique (SIMPAS Team): Specialized in optimization under privacy and communication constraints.

- INRIA ARGO Team: Focused on theoretical analysis and scalable decentralized algorithms.

- CEA (Distributed Systems Team): Focus on distributed consensus algorithms.

- LAMSADE (Miles Team, associated partner with no dedicated budget): Expertise in game theory and machine learning.

For more information, follow our updates and explore the project’s results as they unfold!